How to Easily Add a Robots.txt File to Your Webflow Website

Do you have a Webflow website and want to control how search engines crawl it?

Adding a robots.txt file is a simple yet effective way to manage this. In this blog post, we’ll guide you through creating and implementing a robots.txt file for your Webflow site, without any complicated technical jargon.

What is a robots.txt file?

Before we dive into the “how,” let’s clarify the “what.” A robots.txt file is a plain text file that tells search engine bots (like Google’s Googlebot) which pages or sections of your website they may crawl. It acts as a set of guidelines rather than a lock: well-behaved crawlers follow it, but a page you block from crawling can still show up in search results if other sites link to it.

Add a robots.txt file to your Webflow website step by step

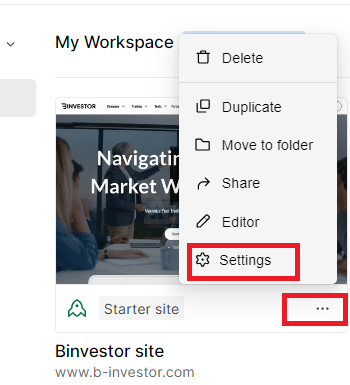

Step 1: Log in to your Webflow account and select your project.

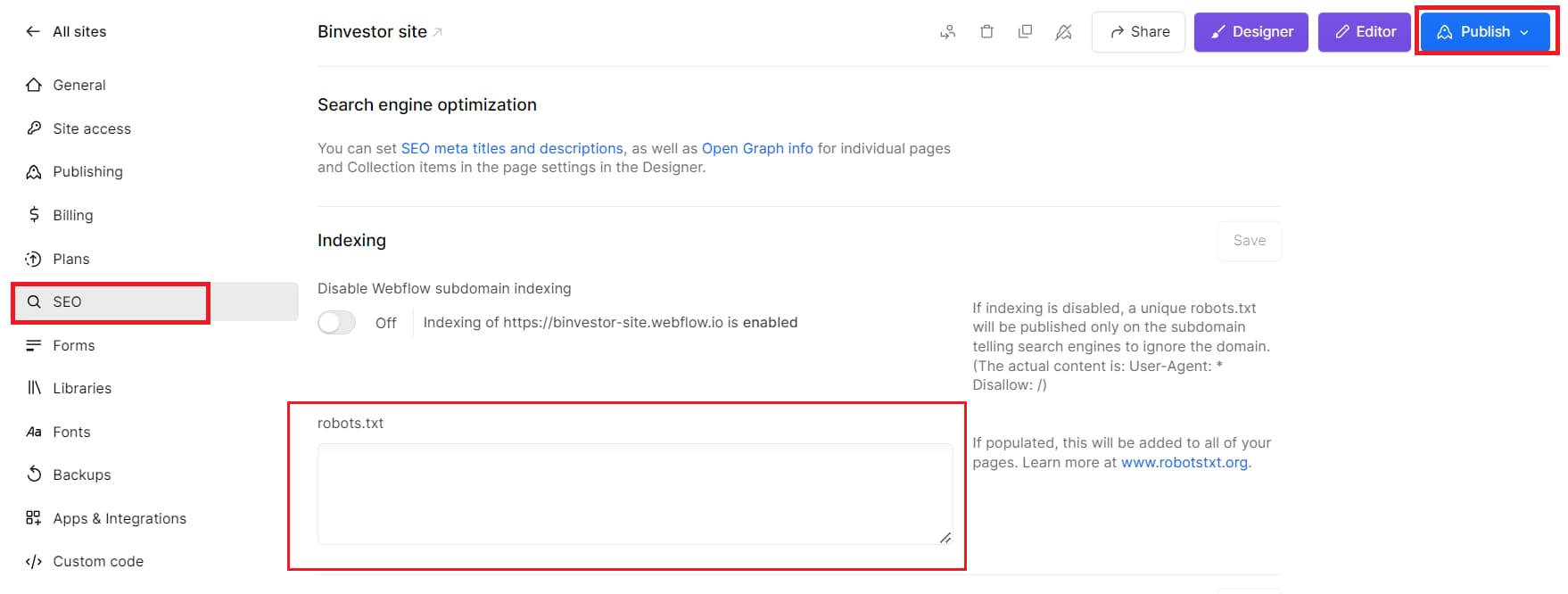

Step 2: Access the “SEO” tab in project settings. Look for the “robots.txt” field.

Step 3: In the “robots.txt” field, type the directives you want to use, save your changes, and then publish your project so the file goes live. For example, the following directives tell all bots not to crawl anything under /private/:

User-agent: *
Disallow: /private/
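If you’d like to sanity-check directives like these before publishing, one option is a small script. The sketch below uses Python’s standard-library robots.txt parser to ask whether a given bot may fetch a given URL. The helper name is_allowed and the example.com domain are placeholders we made up for illustration, and Python’s parser may not match every detail of how Googlebot or other crawlers interpret the file, so treat the result as a rough check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# The same directives shown in Step 3.
RULES = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(rules: str, user_agent: str, url: str) -> bool:
    """Return True if `user_agent` may fetch `url` under `rules`.

    `is_allowed` is a hypothetical helper for this post, not part of Webflow.
    """
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # example.com is a placeholder; swap in your own published domain.
    for path in ("/", "/blog/post", "/private/notes"):
        url = "https://example.com" + path
        print(path, "allowed" if is_allowed(RULES, "Googlebot", url) else "blocked")
```

Once your site is published, you can also open your domain followed by /robots.txt in a browser to confirm the file contains what you typed.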

Conclusion

By following these simple steps, you can add a robots.txt file to your Webflow website. This lets you control which parts of your site search engines crawl, making it a useful tool for managing your website’s visibility. While the instructions are straightforward, be cautious with your directives to avoid unintended consequences: a single “Disallow: /” line, for example, tells bots to stay away from your entire site. Always double-check your robots.txt file and keep it up to date as your website evolves.

Now that you know how to add a robots.txt file to your Webflow website, you have a valuable tool to manage your site’s presence on the web. Have fun optimizing how search engines crawl your website!

What is a robots.txt file for a website?

A robots.txt file is a plain text document that tells search engine crawlers which parts of a website they should or should not crawl.

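Why should I add a robots.txt file to my Webflow website?

Adding a robots.txt file lets you control how search engines access your site, which can help your SEO by keeping crawlers focused on the pages that matter. Keep in mind that the file itself is publicly readable and only asks bots to stay away, so it should not be your only protection for sensitive content.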

What are common robots.txt directives?

Common directives include “User-agent” to specify which bots to control and “Disallow” to indicate which parts of the site should not be crawled.
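To see how “User-agent” and “Disallow” work together, here is a small sketch, again using Python’s standard-library parser. It defines one group of rules for all bots and a stricter group for a single bot. The bot name ExampleBot, the /drafts/ path, the example.com domain, and the helper name who_can_fetch are all made up for illustration, and real crawlers may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Two groups: a default for every bot, and a stricter one for a single bot.
# "ExampleBot" and "/drafts/" are hypothetical names used only in this sketch.
SAMPLE = """\
User-agent: *
Disallow: /drafts/

User-agent: ExampleBot
Disallow: /
"""


def who_can_fetch(rules: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Map each user agent to whether it may fetch `url` under `rules`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    agents = ["Googlebot", "ExampleBot"]
    for path in ("/blog/", "/drafts/idea"):
        print(path, who_can_fetch(SAMPLE, "https://example.com" + path, agents))
```

In this sketch, a bot that has its own “User-agent” group follows that group, while every other bot falls back to the “*” group.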